Smparticle2 - Untitled

“I first ran for Congress in 1999, and I got beat. I just got whooped. I had been in the state legislature for a long time, I was in the minority party, I wasn’t getting a lot done, and I was away from my family and putting a lot of strain on Michelle. Then for me to run and lose that bad, I was thinking maybe this isn’t what I was cut out to do. I was forty years old, and I’d invested a lot of time and effort into something that didn’t seem to be working. But the thing that got me through that moment, and any other time that I’ve felt stuck, is to remind myself that it’s about the work. Because if you’re worrying about yourself—if you’re thinking: ‘Am I succeeding? Am I in the right position? Am I being appreciated?’ – then you’re going to end up feeling frustrated and stuck. But if you can keep it about the work, you’ll always have a path. There’s always something to be done.”

How we determine who’s to blame

How do people assign a cause to events they witness? Some philosophers have suggested that people determine responsibility for a particular outcome by imagining what would have happened if a suspected cause had not intervened.

This kind of reasoning, known as counterfactual simulation, is believed to occur in many situations. For example, soccer referees deciding whether a player should be credited with an “own goal” — a goal accidentally scored for the opposing team — must try to determine what would have happened had the player not touched the ball.

This process can be conscious, as in the soccer example, or unconscious, so that we are not even aware we are doing it. Using technology that tracks eye movements, cognitive scientists at MIT have now obtained the first direct evidence that people unconsciously use counterfactual simulation to imagine how a situation could have played out differently.

“This is the first time that we or anybody have been able to see those simulations happening online, to count how many a person is making, and show the correlation between those simulations and their judgments,” says Josh Tenenbaum, a professor in MIT’s Department of Brain and Cognitive Sciences, a member of MIT’s Computer Science and Artificial Intelligence Laboratory, and the senior author of the new study.

Tobias Gerstenberg, a postdoc at MIT who will be joining Stanford’s Psychology Department as an assistant professor next year, is the lead author of the paper, which appears in the Oct. 17 issue of Psychological Science. Other authors of the paper are MIT postdoc Matthew Peterson, Stanford University Associate Professor Noah Goodman, and University College London Professor David Lagnado.

Follow the ball

Until now, studies of counterfactual simulation could only use reports from people describing how they made judgments about responsibility, which offered only indirect evidence of how their minds were working.

Gerstenberg, Tenenbaum, and their colleagues set out to find more direct evidence by tracking people’s eye movements as they watched two billiard balls collide. The researchers created 18 videos showing different possible outcomes of the collisions. In some cases, the collision knocked one of the balls through a gate; in others, it prevented the ball from doing so.

Before watching the videos, some participants were told that they would be asked to rate how strongly they agreed with statements related to ball A’s effect on ball B, such as, “Ball A caused ball B to go through the gate.” Other participants were asked simply what the outcome of the collision was.

As the subjects watched the videos, the researchers were able to track their eye movements using an infrared light that reflects off the pupil and reveals where the eye is looking. This allowed the researchers, for the first time, to gain a window into how the mind imagines possible outcomes that did not occur.

“What’s really cool about eye tracking is it lets you see things that you’re not consciously aware of,” Tenenbaum says. “When psychologists and philosophers have proposed the idea of counterfactual simulation, they haven’t necessarily meant that you do this consciously. It’s something going on behind the surface, and eye tracking is able to reveal that.”

The researchers found that when participants were asked questions about ball A’s effect on the path of ball B, their eyes followed the course that ball B would have taken had ball A not interfered. Furthermore, the more uncertainty there was as to whether ball A had an effect on the outcome, the more often participants looked toward ball B’s imaginary trajectory.

“It’s in the close cases where you see the most counterfactual looks. They’re using those looks to resolve the uncertainty,” Tenenbaum says.
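As a rough illustration of the idea (not the researchers' actual model), counterfactual simulation can be sketched as repeatedly imagining ball B's path without ball A, with a little noise added each time, and counting how often B would have gone through the gate. Everything here is a hypothetical toy: the function names, the straight-line geometry, and the Gaussian noise are assumptions made for this sketch.

```python
import random


def counterfactual_pass_probability(y0, slope, gate_half_width, distance,
                                    noise_sd, n_samples=10_000, seed=0):
    """Estimate how often ball B would pass the gate if ball A were absent.

    Toy assumptions: B starts at height y0 and travels `distance` units
    toward a gate centered at height 0, moving in a straight line with the
    given slope. Each imagined run perturbs the slope with Gaussian noise.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        noisy_slope = slope + rng.gauss(0.0, noise_sd)
        y_at_gate = y0 + noisy_slope * distance
        if abs(y_at_gate) <= gate_half_width:
            hits += 1
    return hits / n_samples


def causal_judgment(actual_passed, p_counterfactual_pass):
    """Return the estimated chance the outcome would have differed without A."""
    if actual_passed:
        return 1.0 - p_counterfactual_pass
    return p_counterfactual_pass


# A clear case: without A, B would have sailed through the middle of the gate.
clear = counterfactual_pass_probability(0.0, 0.0, 1.0, 10.0, 0.01)
# A close case: without A, B would have headed right at the edge of the gate.
close = counterfactual_pass_probability(1.0, 0.0, 1.0, 10.0, 0.01)
print(clear, close)
```

In this toy setup, the close case is the one where the imagined runs disagree with each other, which loosely mirrors the finding that uncertain cases drew the most looks along ball B's imaginary path.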

Participants who were asked only what the actual outcome had been did not perform the same eye movements along ball B’s alternative pathway.

“The idea that causality is based on counterfactual thinking is an idea that has been around for a long time, but direct evidence is largely lacking,” says Phillip Wolff, an associate professor of psychology at Emory University, who was not involved in the research. “This study offers more direct evidence for that view.”

(Image caption: In this video, two participants’ eye-movements are tracked while they watch a video clip. The blue dot indicates where each participant is looking on the screen. The participant on the left was asked to judge whether they thought that ball B went through the middle of the gate. Participants asked this question mostly looked at the balls and tried to predict where ball B would go. The participant on the right was asked to judge whether ball A caused ball B to go through the gate. Participants asked this question tried to simulate where ball B would have gone if ball A hadn’t been present in the scene. Credit: Tobias Gerstenberg)

How people think

The researchers are now using this approach to study more complex situations in which people use counterfactual simulation to make judgments of causality.

“We think this process of counterfactual simulation is really pervasive,” Gerstenberg says. “In many cases it may not be supported by eye movements, because there are many kinds of abstract counterfactual thinking that we just do in our mind. But the billiard-ball collisions lead to a particular kind of counterfactual simulation where we can see it.”

One example the researchers are studying is the following: Imagine ball C is headed for the gate, while balls A and B each head toward C. Either one could knock C off course, but A gets there first. Is B off the hook, or should it still bear some responsibility for the outcome?

“Part of what we are trying to do with this work is get a little bit more clarity on how people deal with these complex cases. In an ideal world, the work we’re doing can inform the notions of causality that are used in the law,” Gerstenberg says. “There is quite a bit of interaction between computer science, psychology, and legal science. We’re all in the same game of trying to understand how people think about causation.”

When a porous solid retains its properties in liquid form

Known for their exceptional porosity that enables the trapping or transport of molecules, metal-organic frameworks (MOFs) take the form of a powder, which makes them difficult to shape. For the first time, an international team led by scientists from the Institut de recherche de Chimie Paris (CNRS/Chimie ParisTech), and notably involving Air Liquide, has demonstrated the surprising ability of a type of MOF to retain its porous properties in the liquid and then glass state. Published on October 9, 2017 on the Nature Materials website, these findings open the way towards new industrial applications.

Metal-organic frameworks (MOFs) constitute a particularly promising class of materials. Their exceptional porosity makes it possible to store and separate large quantities of gas, or to act as a catalyst for chemical reactions. However, their crystalline structure means that they are produced in powder form, which is difficult to store and use for industrial applications. For the first time, a team of scientists from the CNRS, Chimie ParisTech, Cambridge University, Air Liquide and the ISIS (UK) and Argonne (US) synchrotrons has shown that the properties of a zeolitic MOF were unexpectedly conserved in the liquid phase (which does not generally favor porosity). Then, after cooling and solidification, the glass obtained adopted a disordered, non-crystalline structure that also retained the same properties in terms of porosity. These results will make it possible to shape and use these materials much more efficiently than in powder form.

Moonlight

That Moment… My Heart…

(Photo Credit: Kenneth Jarecke/Contact Press Image)

Golden Gate Bridge by Jason Jko

All these beautiful scenes and all I could think was "LOOK AT ALL THE SCATTERING" :')

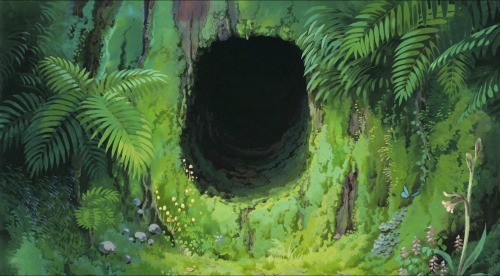

More Art of My Neighbor Totoro - Art Direction by Kazuo Oga (1988)

Balancing Time and Space in the Brain: A New Model Holds Promise for Predicting Brain Dynamics

For as long as scientists have been listening in on the activity of the brain, they have been trying to understand the source of its noisy, apparently random, activity. In the past 20 years, “balanced network theory” has emerged to explain this apparent randomness through a balance of excitation and inhibition in recurrently coupled networks of neurons. A team of scientists has extended the balanced model to provide deep and testable predictions linking brain circuits to brain activity.

Lead investigators at the University of Pittsburgh say the new model accurately explains experimental findings about the highly variable responses of neurons in the brains of living animals. On Oct. 31, their paper, “The spatial structure of correlated neuronal variability,” was published online by the journal Nature Neuroscience.

The new model provides a much richer understanding of how activity is coordinated between neurons in neural circuits. The model could be used in the future to discover neural “signatures” that predict brain activity associated with learning or disease, say the investigators.

“Normally, brain activity appears highly random and variable most of the time, which looks like a weird way to compute,” said Brent Doiron, associate professor of mathematics at Pitt, senior author on the paper, and a member of the University of Pittsburgh Brain Institute (UPBI). “To understand the mechanics of neural computation, you need to know how the dynamics of a neuronal network depends on the network’s architecture, and this latest research brings us significantly closer to achieving this goal.”

Earlier versions of the balanced network theory captured how the timing and frequency of inputs—excitatory and inhibitory—shaped the emergence of variability in neural behavior, but these models used shortcuts that were biologically unrealistic, according to Doiron.

“The original balanced model ignored the spatial dependence of wiring in the brain, but it has long been known that neuron pairs that are near one another have a higher likelihood of connecting than pairs that are separated by larger distances. Earlier models produced unrealistic behavior—either completely random activity that was unlike the brain or completely synchronized neural behavior, such as you would see in a deep seizure. You could produce nothing in between.”

In the context of this balance, neurons are in a constant state of tension. According to co-author Matthew Smith, assistant professor of ophthalmology at Pitt and a member of UPBI, “It’s like balancing on one foot on your toes. If there are small overcorrections, the result is big fluctuations in neural firing, or communication.”

The new model accounts for temporal and spatial characteristics of neural networks and the correlations in the activity between neurons—whether firing in one neuron is correlated with firing in another. The model is such a substantial improvement that the scientists could use it to predict the behavior of living neurons examined in the area of the brain that processes the visual world.

After developing the model, the scientists examined data from the living visual cortex and found that their model accurately predicted the behavior of neurons based on how far apart they were. The activity of nearby neuron pairs was strongly correlated. At an intermediate distance, pairs of neurons were anticorrelated (when one responded more, the other responded less), and at greater distances still they were independent.

“This model will help us to better understand how the brain computes information because it’s a big step forward in describing how network structure determines network variability,” said Doiron. “Any serious theory of brain computation must take into account the noise in the code. A shift in neuronal variability accompanies important cognitive functions, such as attention and learning, as well as being a signature of devastating pathologies like Parkinson’s disease and epilepsy.”

While the scientists examined the visual cortex, they believe their model could be used to predict activity in other parts of the brain, such as areas that process auditory or olfactory cues. They also believe that the model generalizes to the brains of all mammals. In fact, the team found that a neural signature predicted by their model appeared in the visual cortex of living mice studied by another team of investigators.

“A hallmark of the computational approach that Doiron and Smith are taking is that its goal is to infer general principles of brain function that can be broadly applied to many scenarios. Remarkably, we still don’t have things like the laws of gravity for understanding the brain, but this is an important step for providing good theories in neuroscience that will allow us to make sense of the explosion of new experimental data that can now be collected,” said Nathan Urban, associate director of UPBI.
