This Terrifying Eel-robot Will Perform Maintenance On Undersea Equipment

This terrifying eel-robot will perform maintenance on undersea equipment

Nope.

More Posts from Science-is-magical and Others

why do we have butt cheeks i dont understand why did we evolve this way

what use do butt cheeks have

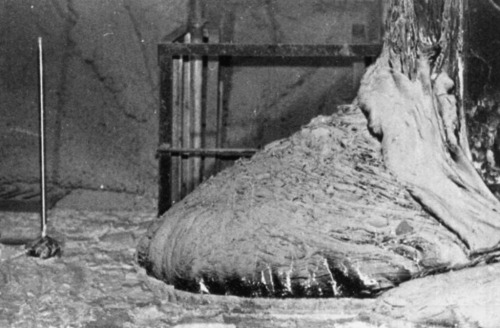

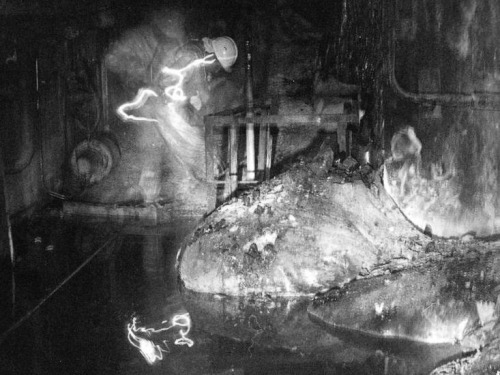

One of the most dangerous pictures ever taken - Elephant’s Foot, Chernobyl. This is a photo of a now dead man next the ‘Elephant’ Foot’ at the Chernobyl power plant.

The image distortions in the photo are created by intense level of radiation almost beyond comprehension. There is no way the person in this photo and the person photographing him could have survived for any more that a few years after being there, even if they quickly ran in, took the photos and ran out again. This photo would be impossible to take today as the rates of radioactive decay are even more extreme now due to a failed military experiment to bomb the reactor core with neuron absorbers. The foot is made up of a small percentage of uranium with the bulk mostly melted sand, concrete and other materials which the molten corium turns into a kind of lava flow. In recent years, it has destroyed a robot which tried to approach it, and the last photos were taken via a mirror mounted to a pole held at the other end of the corridor for a few seconds. It is almost certainly the most dangerous and unstable creation made by humans. These are the effects of exposure: 30 seconds of exposure - dizziness and fatigue a week later 2 minutes of exposure - cells begin to hemorrhage (ruptured blood vessels) 4 minutes - vomiting, diarrhea, and fever 300 seconds - two days to live

From vision to hand action

Our hands are highly developed grasping organs that are in continuous use. Long before we stir our first cup of coffee in the morning, our hands have executed a multitude of grasps. Directing a pen between our thumb and index finger over a piece of paper with absolute precision appears as easy as catching a ball or operating a doorknob. The neuroscientists Stefan Schaffelhofer and Hansjörg Scherberger of the German Primate Center (DPZ) have studied how the brain controls the different grasping movements. In their research with rhesus macaques, it was found that the three brain areas AIP, F5 and M1 that are responsible for planning and executing hand movements, perform different tasks within their neural network. The AIP area is mainly responsible for processing visual features of objects, such as their size and shape. This optical information is translated into motor commands in the F5 area. The M1 area is ultimately responsible for turning this motor commands into actions. The results of the study contribute to the development of neuroprosthetics that should help paralyzed patients to regain their hand functions (eLife, 2016).

The three brain areas AIP, F5 and M1 lay in the cerebral cortex and form a neural network responsible for translating visual properties of an object into a corresponding hand movement. Until now, the details of how this “visuomotor transformation” are performed have been unclear. During the course of his PhD thesis at the German Primate Center, neuroscientist Stefan Schaffelhofer intensively studied the neural mechanisms that control grasping movements. “We wanted to find out how and where visual information about grasped objects, for example their shape or size, and motor characteristics of the hand, like the strength and type of a grip, are processed in the different grasp-related areas of the brain”, says Schaffelhofer.

For this, two rhesus macaques were trained to repeatedly grasp 50 different objects. At the same time, the activity of hundreds of nerve cells was measured with so-called microelectrode arrays. In order to compare the applied grip types with the neural signals, the monkeys wore an electromagnetic data glove that recorded all the finger and hand movements. The experimental setup was designed to individually observe the phases of the visuomotor transformation in the brain, namely the processing of visual object properties, the motion planning and execution. For this, the scientists developed a delayed grasping task. In order for the monkey to see the object, it was briefly lit before the start of the grasping movement. The subsequent movement took place in the dark with a short delay. In this way, visual and motor signals of neurons could be examined separately.

The results show that the AIP area is primarily responsible for the processing of visual object features. “The neurons mainly respond to the three-dimensional shape of different objects”, says Stefan Schaffelhofer. “Due to the different activity of the neurons, we could precisely distinguish as to whether the monkeys had seen a sphere, cube or cylinder. Even abstract object shapes could be differentiated based on the observed cell activity.”

In contrast to AIP, area F5 and M1 did not represent object geometries, but the corresponding hand configurations used to grasp the objects. The information of F5 and M1 neurons indicated a strong resemblance to the hand movements recorded with the data glove. “In our study we were able to show where and how visual properties of objects are converted into corresponding movement commands”, says Stefan Schaffelhofer. “In this process, the F5 area plays a central role in visuomotor transformation. Its neurons receive direct visual object information from AIP and can translate the signals into motor plans that are then executed in M1. Thus, area F5 has contact to both, the visual and motor part of the brain.”

Knowledge of how to control grasp movements is essential for the development of neuronal hand prosthetics. “In paraplegic patients, the connection between the brain and limbs is no longer functional. Neural interfaces can replace this functionality”, says Hansjörg Scherberger, head of the Neurobiology Laboratory at the DPZ. “They can read the motor signals in the brain and use them for prosthetic control. In order to program these interfaces properly, it is crucial to know how and where our brain controls the grasping movements”. The findings of this study will facilitate to new neuroprosthetic applications that can selectively process the areas’ individual information in order to improve their usability and accuracy.

Paint colors designed by neural network, Part 2

So it turns out you can train a neural network to generate paint colors if you give it a list of 7,700 Sherwin-Williams paint colors as input. How a neural network basically works is it looks at a set of data - in this case, a long list of Sherwin-Williams paint color names and RGB (red, green, blue) numbers that represent the color - and it tries to form its own rules about how to generate more data like it.

Last time I reported results that were, well… mixed. The neural network produced colors, all right, but it hadn’t gotten the hang of producing appealing names to go with them - instead producing names like Rose Hork, Stanky Bean, and Turdly. It also had trouble matching names to colors, and would often produce an “Ice Gray” that was a mustard yellow, for example, or a “Ferry Purple” that was decidedly brown.

These were not great names.

There are lots of things that affect how well the algorithm does, however.

One simple change turns out to be the “temperature” (think: creativity) variable, which adjusts whether the neural network always picks the most likely next character as it’s generating text, or whether it will go with something farther down the list. I had the temperature originally set pretty high, but it turns out that when I turn it down ever so slightly, the algorithm does a lot better. Not only do the names better match the colors, but it begins to reproduce color gradients that must have been in the original dataset all along. Colors tend to be grouped together in these gradients, so it shifts gradually from greens to browns to blues to yellows, etc. and does eventually cover the rainbow, not just beige.

Apparently it was trying to give me better results, but I kept screwing it up.

Raw output from RGB neural net, now less-annoyed by my temperature setting

People also sent in suggestions on how to improve the algorithm. One of the most-frequent was to try a different way of representing color - it turns out that RGB (with a single color represented by the amount of Red, Green, and Blue in it) isn’t very well matched to the way human eyes perceive color.

These are some results from a different color representation, known as HSV. In HSV representation, a single color is represented by three numbers like in RGB, but this time they stand for Hue, Saturation, and Value. You can think of the Hue number as representing the color, Saturation as representing how intense (vs gray) the color is, and Value as representing the brightness. Other than the way of representing the color, everything else about the dataset and the neural network are the same. (char-rnn, 512 neurons and 2 layers, dropout 0.8, 50 epochs)

Raw output from HSV neural net:

And here are some results from a third color representation, known as LAB. In this color space, the first number stands for lightness, the second number stands for the amount of green vs red, and the third number stands for the the amount of blue vs yellow.

Raw output from LAB neural net:

It turns out that the color representation doesn’t make a very big difference in how good the results are (at least as far as I can tell with my very simple experiment). RGB seems to be surprisingly the best able to reproduce the gradients from the original dataset - maybe it’s more resistant to disruption when the temperature setting introduces randomness.

And the color names are pretty bad, no matter how the colors themselves are represented.

However, a blog reader compiled this dataset, which has paint colors from other companies such as Behr and Benjamin Moore, as well as a bunch of user-submitted colors from a big XKCD survey. He also changed all the names to lowercase, so the neural network wouldn’t have to learn two versions of each letter.

And the results were… surprisingly good. Pretty much every name was a plausible match to its color (even if it wasn’t a plausible color you’d find in the paint store). The answer seems to be, as it often is for neural networks: more data.

Raw output using The Big RGB Dataset:

I leave you with the Hall of Fame:

RGB:

HSV:

LAB:

Big RGB dataset:

Wait, people are mad that it's blurry? Isn't that black hole in another galaxy????

It’s literally like 55 million light years away

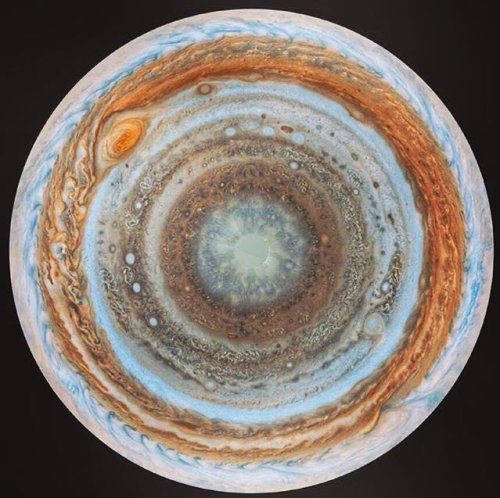

Timelapse of Europa & Io orbiting Jupiter, shot from Cassini during its flyby of Jupiter

-

raidendefender reblogged this · 4 weeks ago

raidendefender reblogged this · 4 weeks ago -

raidendefender liked this · 4 weeks ago

raidendefender liked this · 4 weeks ago -

kanawolf liked this · 1 month ago

kanawolf liked this · 1 month ago -

huricranes reblogged this · 1 month ago

huricranes reblogged this · 1 month ago -

hellspawnartandblog liked this · 2 months ago

hellspawnartandblog liked this · 2 months ago -

the-archivist-raven liked this · 2 months ago

the-archivist-raven liked this · 2 months ago -

shaddow-reblogz reblogged this · 3 months ago

shaddow-reblogz reblogged this · 3 months ago -

shadsmidnightthoughts liked this · 3 months ago

shadsmidnightthoughts liked this · 3 months ago -

the-not-witch-time-forgot reblogged this · 3 months ago

the-not-witch-time-forgot reblogged this · 3 months ago -

dpd-genrefictionbiblioholic liked this · 4 months ago

dpd-genrefictionbiblioholic liked this · 4 months ago -

fuckingonthesubway liked this · 5 months ago

fuckingonthesubway liked this · 5 months ago -

namelessman2 liked this · 5 months ago

namelessman2 liked this · 5 months ago -

lore45 reblogged this · 8 months ago

lore45 reblogged this · 8 months ago -

lore45 liked this · 8 months ago

lore45 liked this · 8 months ago -

cherax-pulcher liked this · 9 months ago

cherax-pulcher liked this · 9 months ago -

cheetorolo liked this · 10 months ago

cheetorolo liked this · 10 months ago -

kuni-dreamer liked this · 11 months ago

kuni-dreamer liked this · 11 months ago -

nataliateresa42 liked this · 11 months ago

nataliateresa42 liked this · 11 months ago -

haoeu liked this · 1 year ago

haoeu liked this · 1 year ago -

catsinspacesuits reblogged this · 1 year ago

catsinspacesuits reblogged this · 1 year ago -

catsinspacesuits liked this · 1 year ago

catsinspacesuits liked this · 1 year ago -

dorykitcat24 liked this · 1 year ago

dorykitcat24 liked this · 1 year ago -

spatcat liked this · 1 year ago

spatcat liked this · 1 year ago -

earthquakening liked this · 1 year ago

earthquakening liked this · 1 year ago -

sigmanta reblogged this · 1 year ago

sigmanta reblogged this · 1 year ago -

sigmanta liked this · 1 year ago

sigmanta liked this · 1 year ago -

combustiblelemon reblogged this · 1 year ago

combustiblelemon reblogged this · 1 year ago -

naamoosh liked this · 1 year ago

naamoosh liked this · 1 year ago -

fedorasaurus liked this · 1 year ago

fedorasaurus liked this · 1 year ago -

randomhoohaas reblogged this · 1 year ago

randomhoohaas reblogged this · 1 year ago -

unclebaldfragrantbeauty reblogged this · 1 year ago

unclebaldfragrantbeauty reblogged this · 1 year ago -

alic0rez liked this · 1 year ago

alic0rez liked this · 1 year ago -

figmentforms liked this · 1 year ago

figmentforms liked this · 1 year ago -

catribone liked this · 1 year ago

catribone liked this · 1 year ago -

eagle-claws liked this · 1 year ago

eagle-claws liked this · 1 year ago -

i-am-the-shadowstorm liked this · 1 year ago

i-am-the-shadowstorm liked this · 1 year ago -

mugbearerscorner reblogged this · 1 year ago

mugbearerscorner reblogged this · 1 year ago -

mugbearerscorner liked this · 1 year ago

mugbearerscorner liked this · 1 year ago -

dragonoffantasyandreality liked this · 1 year ago

dragonoffantasyandreality liked this · 1 year ago -

siren-darkocean reblogged this · 1 year ago

siren-darkocean reblogged this · 1 year ago -

siren-darkocean liked this · 1 year ago

siren-darkocean liked this · 1 year ago -

bee-fox liked this · 1 year ago

bee-fox liked this · 1 year ago -

crazytac liked this · 1 year ago

crazytac liked this · 1 year ago -

leota-nexus liked this · 1 year ago

leota-nexus liked this · 1 year ago -

steampaul liked this · 1 year ago

steampaul liked this · 1 year ago -

dericbindel reblogged this · 1 year ago

dericbindel reblogged this · 1 year ago -

dericbindel liked this · 1 year ago

dericbindel liked this · 1 year ago -

whippeywhippey liked this · 1 year ago

whippeywhippey liked this · 1 year ago -

reallybigsquid reblogged this · 1 year ago

reallybigsquid reblogged this · 1 year ago