From Vision To Hand Action

Our hands are highly developed grasping organs that are in continuous use. Long before we stir our first cup of coffee in the morning, our hands have executed a multitude of grasps. Directing a pen between our thumb and index finger over a piece of paper with absolute precision appears as easy as catching a ball or operating a doorknob. The neuroscientists Stefan Schaffelhofer and Hansjörg Scherberger of the German Primate Center (DPZ) have studied how the brain controls the different grasping movements. In their research with rhesus macaques, they found that the three brain areas AIP, F5 and M1, which are responsible for planning and executing hand movements, perform different tasks within their neural network. The AIP area is mainly responsible for processing visual features of objects, such as their size and shape. This visual information is translated into motor commands in the F5 area. The M1 area is ultimately responsible for turning these motor commands into actions. The results of the study contribute to the development of neuroprosthetics that should help paralyzed patients regain their hand functions (eLife, 2016).

The three brain areas AIP, F5 and M1 lie in the cerebral cortex and form a neural network responsible for translating visual properties of an object into a corresponding hand movement. Until now, the details of how this “visuomotor transformation” is performed have been unclear. During the course of his PhD thesis at the German Primate Center, neuroscientist Stefan Schaffelhofer intensively studied the neural mechanisms that control grasping movements. “We wanted to find out how and where visual information about grasped objects, for example their shape or size, and motor characteristics of the hand, like the strength and type of a grip, are processed in the different grasp-related areas of the brain”, says Schaffelhofer.

For this, two rhesus macaques were trained to repeatedly grasp 50 different objects. At the same time, the activity of hundreds of nerve cells was measured with so-called microelectrode arrays. In order to compare the applied grip types with the neural signals, the monkeys wore an electromagnetic data glove that recorded all the finger and hand movements. The experimental setup was designed to observe the phases of the visuomotor transformation in the brain individually, namely the processing of visual object properties, motion planning and execution. To this end, the scientists developed a delayed grasping task. The object was briefly lit before the start of the grasping movement so that the monkey could see it. The subsequent movement took place in the dark after a short delay. In this way, the visual and motor signals of neurons could be examined separately.

The results show that the AIP area is primarily responsible for the processing of visual object features. “The neurons mainly respond to the three-dimensional shape of different objects”, says Stefan Schaffelhofer. “Due to the different activity of the neurons, we could precisely distinguish whether the monkeys had seen a sphere, cube or cylinder. Even abstract object shapes could be differentiated based on the observed cell activity.”

In contrast to AIP, areas F5 and M1 did not represent object geometries, but the corresponding hand configurations used to grasp the objects. The information carried by F5 and M1 neurons strongly resembled the hand movements recorded with the data glove. “In our study we were able to show where and how visual properties of objects are converted into corresponding movement commands”, says Stefan Schaffelhofer. “In this process, the F5 area plays a central role in visuomotor transformation. Its neurons receive direct visual object information from AIP and can translate the signals into motor plans that are then executed in M1. Thus, area F5 has contact with both the visual and the motor parts of the brain.”

Knowledge of how grasp movements are controlled is essential for the development of neural hand prostheses. “In paraplegic patients, the connection between the brain and limbs is no longer functional. Neural interfaces can replace this functionality”, says Hansjörg Scherberger, head of the Neurobiology Laboratory at the DPZ. “They can read the motor signals in the brain and use them for prosthetic control. In order to program these interfaces properly, it is crucial to know how and where our brain controls the grasping movements”. The findings of this study will facilitate new neuroprosthetic applications that can selectively process the areas’ individual information in order to improve their usability and accuracy.

More Posts from Science-is-magical and Others

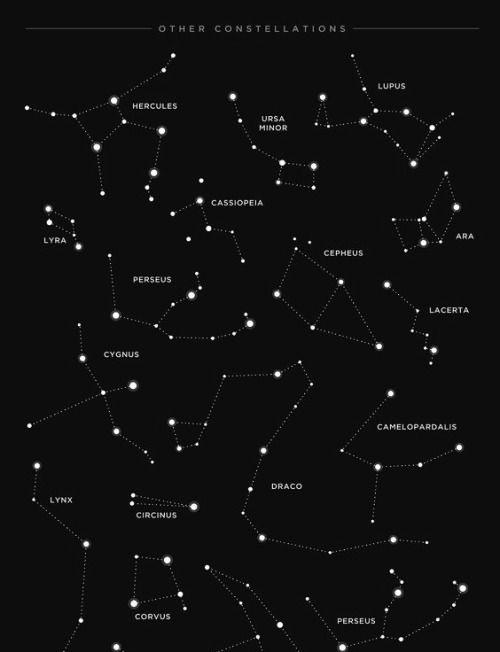

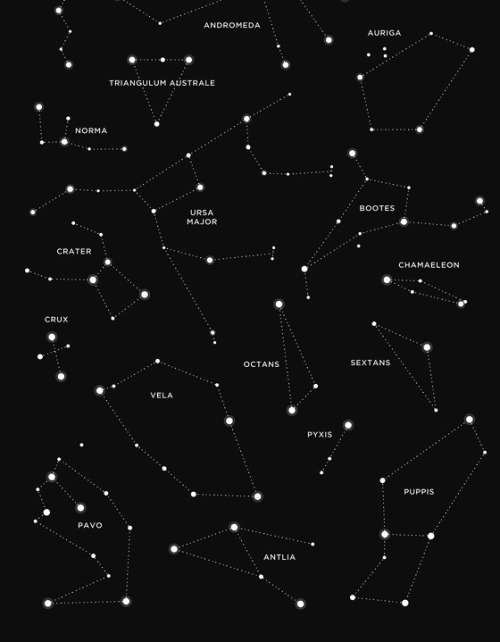

A group of astronomers is using a new method to search for hard to spot alien planets: By measuring the difference between the amount of light coming from the planets’ daysides and nightsides, astronomers have spotted 60 new worlds thus far.

Monkey sees… monkey knows?

Socrates is often quoted as having said, “I know that I know nothing.” This ability to know what you know or don’t know—and how confident you are in what you think you know—is called metacognition.

When asked a question, a human being can decline to answer if he knows that he does not know the answer. Although non-human animals cannot verbally declare any sort of metacognitive judgments, Jessica Cantlon, an assistant professor of brain and cognitive sciences at Rochester, and PhD candidate Stephen Ferrigno have found that non-human primates exhibit a metacognitive process similar to that of humans. Their research on metacognition is part of a larger enterprise of figuring out whether non-human animals are “conscious” in the human sense.

In a paper published in Proceedings of the Royal Society B, they report that monkeys, like humans, base their metacognitive confidence level on fluency—how easy something is to see, hear, or perceive. For example, humans are more confident that something is correct, trustworthy, or memorable—even if this may not be the case—if it is written in a larger font.

“Humans have a variety of these metacognitive illusions—false beliefs about how they learn or remember best,” Cantlon says.

Because other primate species exhibit metacognitive illusions like humans do, the researchers believe this cognitive ability could have an evolutionary basis. Cognitive abilities that have an evolutionary basis are likely to emerge early in development.

“Studying metacognition in non-human primates could give us a foothold for how to study metacognition in young children,” Cantlon says. “Understanding the most basic and primitive forms of metacognition is important for predicting the circumstances that lead to good versus poor learning in human children.”

Cantlon and Ferrigno determined that non-human primates exhibited metacognitive illusions after they observed primates completing a series of steps on a computer:

The monkey touches a start screen.

He sees a picture, which is the sample. The goal is to remember that sample because he will be tested on this later. The monkey touches the sample to move to the next screen.

The next screen shows the sample picture among some distractors. The monkey must touch the image he has seen before.

Instead of getting a reward right away, which rules out decisions based purely on response-reward, the monkey next sees a betting screen on which he communicates how certain he is that he’s right. If he chooses a high bet and is correct, three tokens are added to a token bank. Once the token bank is full, the monkey gets a treat. If he gets the task incorrect and placed a high bet, he loses three tokens. If he placed a low bet, he gets one token regardless of whether he is right or wrong.
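The payoff rules in the last step mean a high bet only pays off on average when the monkey is fairly likely to be right. Here is a minimal sketch of that arithmetic, assuming the token changes listed above are the only outcomes; the function names are made up for illustration and are not from the study.

```python
def expected_tokens(p_correct: float, bet: str) -> float:
    """Average token change for one trial, given the chance of being correct."""
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be between 0 and 1")
    if bet == "high":
        # High bet: +3 tokens when correct, -3 tokens when incorrect.
        return 3 * p_correct - 3 * (1 - p_correct)
    if bet == "low":
        # Low bet: +1 token whether the answer is right or wrong.
        return 1.0
    raise ValueError("bet must be 'high' or 'low'")


def break_even_probability() -> float:
    """Chance of being correct at which both bets pay the same on average."""
    # Solve 3p - 3(1 - p) = 1, which gives 6p = 4.
    return 4 / 6


if __name__ == "__main__":
    for p in (0.5, break_even_probability(), 0.9):
        print(f"p={p:.2f} high={expected_tokens(p, 'high'):+.2f} "
              f"low={expected_tokens(p, 'low'):+.2f}")
```

Under these rules the high bet beats the low bet on average only when the chance of being correct is above two thirds, so choosing it is a reasonable signal of confidence.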

Researchers manipulated the fluency of the images, first making them easier to see by increasing the contrast (the black image), then making them less fluent by decreasing the contrast (the grey image).

The monkeys were more likely to place a high bet, meaning they were more confident that they knew the answer, when the contrast of the images was increased.

“Fluency doesn’t affect actual memory performance,” Ferrigno says. “The monkeys are just as likely to get an answer right or wrong. But this does influence how confident they are in their response.”

Since metacognition can be incorrect through metacognitive illusion, why then have humans retained this ability?

“Metacognition is a quick way of making a judgment about whether or not you know an answer,” Ferrigno says. “We show that you can exploit and manipulate metacognition, but, in the real world, these cues are actually pretty good most of the time.”

Take the game of Jeopardy, for example. People press the buzzer more quickly than they could possibly arrive at an answer. Higher fluency cues, such as shorter, more common, and easier-to-pronounce words, allow the mind to make snap judgments about whether or not it thinks it knows the answer, even though it’s too quick for it to actually know.

Additionally, during a presentation, a person presented with large amounts of information can be fairly confident that the title of a lecture slide, written in a larger font, will be more important to remember than all the smaller text below.

“This is the same with the monkeys,” Ferrigno says. “If they saw the sample picture well and it was easier for them to encode, they will be more confident in their answer and will bet high.”

hey guys i think i got a pretty nice tan over the summer, what do you think?

before:

after:

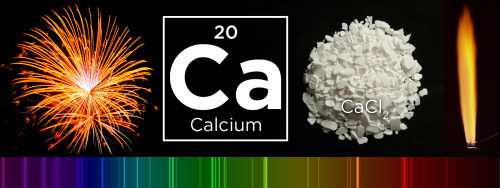

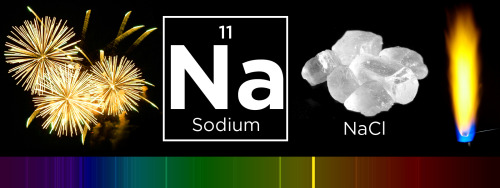

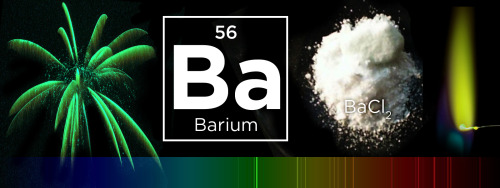

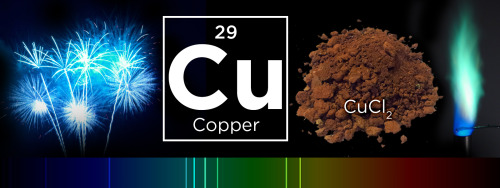

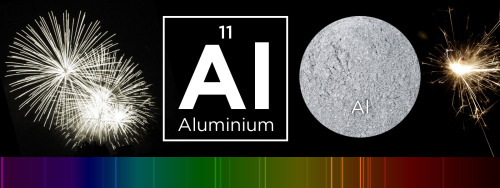

What makes fireworks colorful?

It’s all thanks to the luminescence of metals. When certain metals are heated (over a flame or in a hot explosion) their electrons jump up to a higher energy state. When those electrons fall back down, they emit specific frequencies of light - and each chemical has a unique emission spectrum.
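The energy an electron gives up when it falls back sets the color of the light through the relation E = hc/λ. Here is a small sketch of that conversion. The 589 nm example is the well-known yellow sodium line; it is an outside illustration rather than a value from this post, and the function name is made up.

```python
PLANCK_EV_S = 4.135667696e-15    # Planck constant in eV*s
LIGHT_SPEED_M_S = 299_792_458.0  # speed of light in m/s


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy in electronvolts of a photon with the given wavelength in nm."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_EV_S * LIGHT_SPEED_M_S / wavelength_m


if __name__ == "__main__":
    # Assumed example wavelength: yellow sodium line near 589 nm.
    print(f"{photon_energy_ev(589.0):.3f} eV")
```

Shorter wavelengths (blue) correspond to larger energy drops than longer ones (red), which is why different metals give different colors.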

You can see that the most prominent bands in the spectra above match the firework colors. The colors often burn brighter with the addition of a chlorine donor, a compound that supplies chlorine (Cl).

But the metals alone wouldn’t look like much. They need to be excited. Black powder (mostly nitrates like KNO3) provides oxygen for the rapid oxidation of charcoal (C) to create a lot of hot expanding gas - the BOOM. That, in turn, provides the energy for luminescence - the AWWWW.

Aluminium has a special role — it emits a bright white light … and makes sparks!

Images: Charles D. Winters, Andrew Lambert Photography / Science Source, iStockphoto, Epic Fireworks, Softyx, Mark Schellhase, Walkerma, Firetwister, Rob Lavinsky, iRocks.com, Søren Wedel Nielsen

New theory explains how beta waves arise in the brain

Beta rhythms, or waves of brain activity with a frequency of approximately 20 Hz, accompany vital fundamental behaviors such as attention, sensation and motion and are associated with some disorders such as Parkinson’s disease. Scientists have debated how the spontaneous waves emerge, and they have not yet determined whether the waves are just a byproduct of activity or play a causal role in brain functions. Now, in a new paper led by Brown University neuroscientists, they have a specific new mechanistic explanation of beta waves to consider.

The new theory, presented in the Proceedings of the National Academy of Sciences, is the product of several lines of evidence: external brainwave readings from human subjects, sophisticated computational simulations and detailed electrical recordings from two mammalian model organisms.

“A first step to understanding beta’s causal role in behavior or pathology, and how to manipulate it for optimal function, is to understand where it comes from at the cellular and circuit level,” said corresponding author Stephanie Jones, research associate professor of neuroscience at Brown University. “Our study combined several techniques to address this question and proposed a novel mechanism for spontaneous neocortical beta. This discovery suggests several possible mechanisms through which beta may impact function.”

Making waves

The team started by using external magnetoencephalography (MEG) sensors to observe beta waves in the human somatosensory cortex, which processes sense of touch, and the inferior frontal cortex, which is associated with higher cognition.

They closely analyzed the beta waves, finding they lasted at most a mere 150 milliseconds and had a characteristic wave shape, featuring a large, steep valley in the middle of the wave.

The question from there was what neural activity in the cortex could produce such waves. The team attempted to recreate the waves using a computer model of cortical circuitry, made up of a multilayered cortical column that contained multiple cell types across different layers. Importantly, the model was designed to include a cell type called pyramidal neurons, whose activity is thought to dominate the human MEG recordings.

They found that they could closely replicate the shape of the beta waves in the model by delivering two kinds of excitatory synaptic stimulation to distinct layers in the cortical columns of cells: one that was weak and broad in duration to the lower layers, contacting spiny dendrites on the pyramidal neurons close to the cell body; and another that was stronger and briefer, lasting 50 milliseconds (i.e., one beta period), to the upper layers, contacting dendrites farther away from the cell body. The strong distal drive created the valley in the waveform that determined the beta frequency.
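The "50 milliseconds (i.e., one beta period)" figure follows directly from the roughly 20 Hz frequency quoted for beta rhythms. As a quick check of that arithmetic, assuming exactly 20 Hz for simplicity (the function names are illustrative):

```python
def period_ms(frequency_hz: float) -> float:
    """Duration of one cycle in milliseconds for a given frequency in Hz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1000.0 / frequency_hz


def cycles_in(duration_ms: float, frequency_hz: float) -> float:
    """Number of cycles that fit into a time window of the given length."""
    return duration_ms / period_ms(frequency_hz)


if __name__ == "__main__":
    print(period_ms(20.0))         # one beta period at 20 Hz
    print(cycles_in(150.0, 20.0))  # cycles in the longest observed events
```

At 20 Hz one cycle lasts 50 ms, so a beta event of at most 150 ms spans no more than about three cycles.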

Meanwhile they tried to model other hypotheses about how beta waves emerge, but found those unsuccessful.

With a model of what to look for, the team then tested it by searching for a real biological correlate in two animal models. The team analyzed measurements in the cortex of mice and rhesus macaques and found direct confirmation that this kind of stimulation and response occurred across the cortical layers in the animal models.

“The ultimate test of the model predictions is to record the electrical signals inside the brain,” Jones said. “These recordings supported our model predictions.”

Beta in the brain

Neither the computer models nor the measurements traced the source of the excitatory synaptic stimulations that drive the pyramidal neurons to produce the beta waves, but Jones and her co-authors posit that they likely come from the thalamus, deeper in the brain. Projections from the thalamus happen to be in exactly the right places needed to deliver signals to the right positions on the dendrites of pyramidal neurons in the cortex. The thalamus is also known to send out bursts of activity that last 50 milliseconds, as predicted by their theory.

With a new biophysical theory of how the waves emerge, the researchers hope the field can now investigate whether beta rhythms affect or merely reflect behavior and disease. Jones’s team, in collaboration with Professor of Neuroscience Christopher Moore at Brown, is now testing predictions from the theory that beta may decrease sensory or motor information processing functions in the brain. New hypotheses are that the inputs that create beta may also stimulate inhibitory neurons in the top layers of the cortex; that they may saturate the activity of the pyramidal neurons, thereby reducing their ability to process information; or that the thalamic bursts that give rise to beta occupy the thalamus to the point where it doesn’t pass information along to the cortex.

Figuring this out could lead to new therapies based on manipulating beta, Jones said.

“An active and growing field of neuroscience research is trying to manipulate brain rhythms for optimal function with stimulation techniques,” she said. “We hope that our novel finding on the neural origin of beta will help guide research to manipulate beta, and possibly other rhythms, for improved function in sensorimotor pathologies.”
