Be Greater Than Average.

More Posts from Science-is-magical and Others

New technique captures the activity of an entire brain in a snapshot

When it comes to measuring brain activity, scientists have tools that can take a precise look at a small slice of the brain (less than one cubic millimeter), or a blurred look at a larger area. Now, researchers at The Rockefeller University have described a new technique that combines the best of both worlds—it captures a detailed snapshot of global activity in the mouse brain.

(Image caption: Sniff, sniff: This density map of the cerebral cortex of a mouse shows which neurons get activated when the animal explores a new environment. The lit-up region at the center (white and yellow) represents neurons associated with the mouse’s whiskers.)

“We wanted to develop a technique that would show you the level of activity at the precision of a single neuron, but at the scale of the whole brain,” says study author Nicolas Renier, a postdoctoral fellow in the lab of Marc Tessier-Lavigne, Carson Family Professor and head of the Laboratory of Brain Development and Repair, and president of Rockefeller University.

The new method, described in Cell, takes a picture of all the active neurons in the brain at a specific time. The mouse brain contains tens of millions of neurons, and a typical image depicts the activity of approximately one million neurons, says Tessier-Lavigne. “The purpose of the technique is to accelerate our understanding of how the brain works.”

Making brains transparent

“Because of the nature of our technique, we cannot visualize live brain activity over time—we only see neurons that are active at the specific time we took the snapshot,” says Eliza Adams, a graduate student in Tessier-Lavigne’s lab and co-author of the study. “But what we gain in this trade-off is a comprehensive view of most neurons in the brain, and the ability to compare these active neuronal populations between snapshots in a robust and unbiased manner.”

Here’s how the tool works: The researchers expose a mouse to a situation that would provoke altered brain activity (such as taking an anti-psychotic drug, brushing whiskers against an object while exploring, or parenting a pup), then make the measurement after a pause. The pause is important, explains Renier, because the technique measures neuron activity indirectly, via the translation of neuronal genes into proteins, which takes about 30 minutes to occur.

The researchers then treat the brain to make it transparent—following an improved version of a protocol called iDISCO, developed by Zhuhao Wu, a postdoctoral associate in the Tessier-Lavigne lab—and visualize it using light-sheet microscopy, which takes the snapshot of all active neurons in 3-D.

To determine where an active neuron is located within the brain, Christoph Kirst, a fellow in Rockefeller’s Center for Studies in Physics and Biology, developed software to detect the active neurons and to automatically map the snapshot to a 3-D atlas of the mouse brain, generated by the Allen Brain Institute.

Although each snapshot of brain activity typically includes about one million active neurons, researchers can sift through that mass of data relatively quickly if they compare one snapshot to another, says Renier. By eliminating the neurons that are active in both images, researchers are left with only those specific to each one, enabling them to home in on what is unique to each state.
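As a toy illustration of that comparison idea only (this is not the study's software, and the neuron labels below are made up), two snapshots can be treated as sets of active-neuron labels, with the shared ones removed from each:

```python
def unique_to_each(snapshot_a, snapshot_b):
    """Return (only in A, only in B) after dropping items active in both."""
    shared = snapshot_a & snapshot_b
    return snapshot_a - shared, snapshot_b - shared


# Hypothetical labels standing in for detected active neurons
# that have already been mapped onto a common brain atlas.
exploring = {"n1", "n2", "n3", "n4"}
resting = {"n3", "n4", "n5"}

only_exploring, only_resting = unique_to_each(exploring, resting)
print(sorted(only_exploring))  # ['n1', 'n2']
print(sorted(only_resting))    # ['n5']
```

Real snapshots come from different animals, so an actual comparison would have to work on positions in a shared atlas and on statistics across many brains rather than on exact matching labels. The sketch only shows the "remove what is common" step described above.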

Observing and testing how the brain works

The primary purpose of the tool, he adds, is to help researchers generate hypotheses about how the brain functions that then can be tested in other experiments. For instance, using their new techniques, the researchers, in collaboration with Catherine Dulac and other scientists at Harvard University, observed that when an adult mouse encounters a pup, a region of its brain known to be active during parenting—called the medial pre-optic nucleus, or MPO—lights up. But they also observed that, after the MPO area becomes activated, there is less activity in the cortical amygdala, an area that processes aversive responses, which they found to be directly connected to the MPO “parenting region.”

“Our hypothesis,” says Renier, “is that parenting neurons put the brake on activity in the fear region, which may suppress aversive responses the mice may have towards pups.” Indeed, mice that are being aggressive to pups tend to show more activity in the cortical amygdala.

To test this idea, the next step is to block the activity of this brain region to see if this reduces aggression in the mice, says Renier.

The technique also has broader implications than simply looking at what areas of the mouse brain are active in different situations, he adds. It could be used to map brain activity in response to any biological change, such as the spread of a drug or disease, or even to explore how the brain makes decisions. “You can use the same strategy to map anything you want in the mouse brain,” says Renier.

dude seeing these Mega high quality images of the surface of mars that we now have has me fucked up. Like. Mars is a place. mars is a real actual place where one could hypothetically stand. It is a physical place in the universe. ITS JUST OUT THERE LOOKING LIKE UH IDK A REGULAR OLD DESERT WITH LOTS OF ROCKS BUT ITS A WHOLE OTHER PLANET?

On Oct 31, 2015 scientists used radar imaging to photograph a little dead comet. Much to their surprise, it looked quite a lot like a skull! Due to that and the timing, they nicknamed it Death Comet.

Death Comet will swing by us again this year (albeit a little later than last time).

You can read more about the Death Comet here: https://www.universetoday.com/140108/the-death-comet-will-pass-by-earth-just-after-halloween/

Happy Halloween, all!!! 💀🎃

Possible case for fifth force of nature

A team of physicists at the University of California has uploaded a paper to the arXiv preprint server in which they suggest that work done by a team in Hungary last year might have revealed the existence of a fifth force of nature. Their paper has, naturally, caused quite a stir in the physics community, as several groups have set a goal of reproducing the experiments conducted by the team at the Hungarian Academy of Sciences’ Institute for Nuclear Research.

The work done by the Hungarian team, led by Attila Krasznahorkay, examined the possible existence of dark photons - analogs of conventional photons that would interact with dark matter. They shot protons at lithium-7 samples, creating beryllium-8 nuclei, which, as they decayed, emitted pairs of electrons and positrons. Surprisingly, as they monitored the emitted pairs, instead of a consistent drop-off, there was a slight bump, which the researchers attributed to the creation of an unknown particle with a mass of approximately 17 MeV. The team uploaded their results to the arXiv server, and their paper was later published by Physical Review Letters. It attracted very little attention until the team at UoC uploaded their own paper suggesting that the new particle found by the Hungarian team was not a dark photon, but was instead possibly a protophobic X boson, which they further suggested might carry a super-short-range force that acts over just the width of an atomic nucleus - which would mean that it is not one of the four fundamental forces that underlie modern physics.

The paper uploaded by the UoC team has created some excitement, as well as public expressions of doubt - reports of the possibility of a fifth force of nature have been heard before, but none have panned out. But still, the idea is intriguing enough that several teams have announced plans to repeat the experiments conducted by the Hungarian team, and all eyes will be on the DarkLight experiments at the Jefferson Laboratory, where a team is also looking for evidence of dark photons - they will be shooting electrons at gas targets looking for anything with masses between 10 and 100 MeV, and now more specifically for those in the 17 MeV region. What they find, or don’t, could show within a year’s time whether an elusive fifth force of nature actually exists.

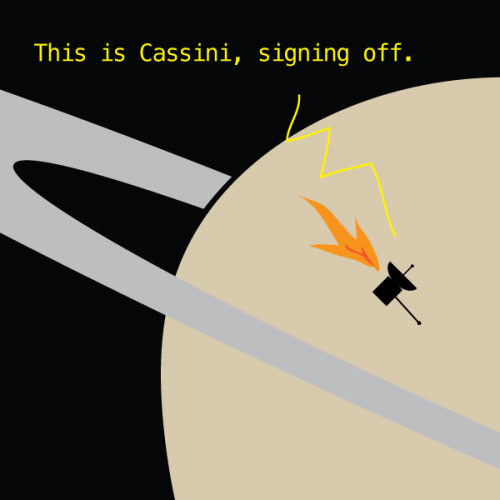

Friday, Cassini will dive into Saturn’s atmosphere and put an end to its nearly 20-year mission. Over those years we learned an incredible amount about Saturn, its rings, and its many moons. During the grand finale, Cassini will continue to send back information about Saturn’s atmosphere before burning up like a shooting star.

How Printing a 3-D Skull Helped Save a Real One

What started as a stuffy nose and mild cold symptoms for 15-year-old Parker Turchan led to a far more serious diagnosis: a rare type of tumor in his nose and sinuses that extended through his skull near his brain.

“He had always been a healthy kid, so we never imagined he had a tumor,” says Parker’s father, Karl. “We didn’t even know you could get a tumor in the back of your nose.”

The Portage, Michigan, high school sophomore was referred to the University of Michigan’s C.S. Mott Children’s Hospital, where doctors determined the tumor extended so deep that it was beyond what regular endoscopy could see.

The team members needed to get the best representation of the tumor’s extent to ensure that their surgical approach could successfully remove the entire mass.

“Parker had an uncommon, large, high-stage tumor in a very challenging area,” says Mott pediatric head and neck surgeon David Zopf, M.D. “The tumor’s location and size had me question whether a minimally invasive approach would allow us to remove the tumor completely.”

To help answer that question, teams at Mott sought an innovative approach: crafting a 3-D replica of Parker’s skull.

The model, made of polylactic acid, helped simulate the coming operation on Parker by giving U-M surgeons “an exact replica of his craniofacial anatomy and a way to essentially touch the ‘tumor’ with our hands ahead of time,” Zopf says.

Just as important, it also allowed the team to counsel Parker and his family by offering them a look at what lurked within — and, with the test run successfully complete, what would lie ahead.

A ‘pretty impressive’ model

The rare and aggressive tumor in Parker’s nose is known as juvenile nasopharyngeal angiofibroma, a mass that grows in the back of the nasal cavity and predominantly affects young male teens. Mott sees a handful of cases each year.

In Parker’s case, the tumor had two large parts: one roughly the size of an egg and the other the size of a kiwi. The mass sat right in the center of the craniofacial skeleton below the brain and next to the nerves that control eye movement and vision.

“We were obviously concerned about the risks involved in this kind of procedure, which we knew could lead to a lot of blood loss and was sensitive because it was so close to the nerves in his face,” says Karl, who praised the 3-D methodology used to aid his son. “It was pretty impressive to see the model of Parker’s skull ahead of the surgery. We had no idea this was even possible.”

Zopf, working with Erin McKean, M.D., a U-M skull base surgeon, was able to completely remove the large tumor. Kyle VanKoevering, M.D., and Sajad Arabnejad, Ph.D., aided in model preparation.

Through preoperative embolization, the blood supply to the tumor was blocked off the day before surgery to decrease blood loss. A large portion of the tumor was then detached endoscopically and removed through the mouth. The remaining mass under the brain was taken out through the nose.

Doctors took pictures of Parker’s anatomy during the surgery and, later, compared them with pictures from the model. They were nearly identical.

“Words alone can’t express how thankful we are for Parker’s talented team of surgeons at Mott,” says his mother, Heidi. “Parker is back to his old self again.”

Powerful potential

Although medical application of the technology continues to gain attention, it isn’t entirely new. Zopf and Mott teams have used 3-D printing for almost five years.

Groundbreaking 3-D printed splints made at U-M have helped save the lives of babies with severe tracheobronchomalacia, which causes the windpipe to periodically collapse and prevents normal breathing. Mott has also used 3-D printing on a fetus to plan for a potentially complicated birth.

“We are finding more and more uses for 3-D printing in medicine,” Zopf says. “It is proving to be a powerful tool that will allow for enhanced patient care.”

Based on success in patients such as Parker and continued collaboration, it’s a concept that appears poised to thrive.

“Because of the team approach we’ve established at the University of Michigan between otolaryngology and biomedical engineering, the printed models can be designed and rapidly produced at a very low cost,” Zopf says. “Michigan is one of only a few places in the nation and world that has the capacity to do this.”

Blind people gesture (and why that’s kind of a big deal)

People who are blind from birth will gesture when they speak. I always like pointing out this fact when I teach classes on gesture, because it gives us an interesting perspective on how we learn and use gestures. Until now I’ve mostly cited a 1998 paper from Jana Iverson and Susan Goldin-Meadow that analysed the gestures and speech of young blind people. Not only do blind people gesture, but the frequency and types of gestures they use do not appear to differ greatly from how sighted people gesture. If people learn gesture without ever seeing a gesture (and, most likely, never being shown), then there must be something about learning a language that means you get gestures as a bonus.

Blind people will even gesture when talking to other blind people, and sighted people will gesture when speaking on the phone - so we know that people don’t only gesture when they speak to someone who can see their gestures.

Earlier this year a new paper came out that adds to this story. Şeyda Özçalışkan, Ché Lucero and Susan Goldin-Meadow looked at the gestures of blind speakers of Turkish and English, to see if the *way* they gestured was different to sighted speakers of those languages. Some of the sighted speakers were blindfolded and others left able to see their conversation partner.

Turkish and English were chosen because it has already been established that speakers of those languages consistently gesture differently when talking about videos of items moving. English speakers will be more likely to show the manner (e.g. ‘rolling’ or ‘bouncing’) and trajectory (e.g. ‘left to right’, ‘downwards’) together in one gesture, and Turkish speakers will show these features as two separate gestures. This reflects the fact that English ‘roll down’ is one verbal clause, while in Turkish the equivalent would be yuvarlanarak iniyor, which translates as two verbs ‘rolling descending’.

Since we know that blind people do gesture, Özçalışkan’s team wanted to figure out if they gestured like other speakers of their language. Did the blind Turkish speakers separate the manner and trajectory of their gestures like their verbs? Did English speakers combine them? Of course, the standard methodology of showing videos wouldn’t work with blind participants, so the researchers built three dimensional models of events for people to feel before they discussed them.

The results showed that blind Turkish speakers gesture like their sighted counterparts, and the same for English speakers. All Turkish speakers gestured significantly differently from all English speakers, regardless of sightedness. This means that these particular gestural patterns are something that’s deeply linked to the grammatical properties of a language, and not something that we learn from looking at other speakers.

References

Jana M. Iverson & Susan Goldin-Meadow. 1998. Why people gesture when they speak. Nature, 396(6708), 228-228.

Şeyda Özçalışkan, Ché Lucero & Susan Goldin-Meadow. 2016. Is seeing gesture necessary to gesture like a native speaker? Psychological Science, 27(5), 737–747.

Asli Ozyurek & Sotaro Kita. 1999. Expressing manner and path in English and Turkish: Differences in speech, gesture, and conceptualization. In Twenty-first Annual Conference of the Cognitive Science Society (pp. 507-512). Erlbaum.
