*chirp*

*chirp*

you mean to tell me there are people who don't make little creature noises on a daily basis? wild


I feel this

It really is wild that some politicians can stand there and say "yeah we're getting rid of a program that keeps quite literally millions of people alive specifically so we can cut taxes for people who are already richer than god" as if it's a normal political stance and not so cartoonishly evil I'm legit shocked perry the platypus doesn't break through the nearest wall the minute the words leave their mouth.

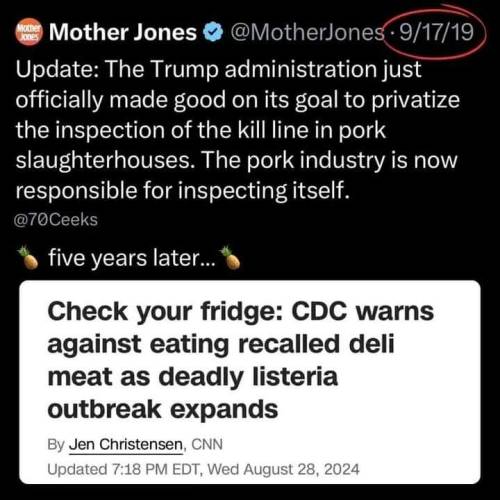

It's almost as if removing regulations will result in consumer health and safety suffering. Who would have guessed?!

![Screenshot of a news article from The Economic Times, published/last updated Feb 17, 2025 6:06pm.

Title: Newly launched DOGE website hacked: Classified information released?

Synopsis: Elon Musk had [said, sic] earlier that DOGE would be the most transparent government organisation ever.](https://64.media.tumblr.com/0e5329aeab537a45f7ba2cea5ffdacda/0e78cd678c9b54f9-c7/s640x960/ea743a21ff077911d62604cdfd0dc54d4f423d8f.png)

I don't think that "we don't know anything about hosting an even moderately secure website, we haven't secured our database and we don't know how to protect classified information" is the kind of "most transparent government organisation ever" that we want.

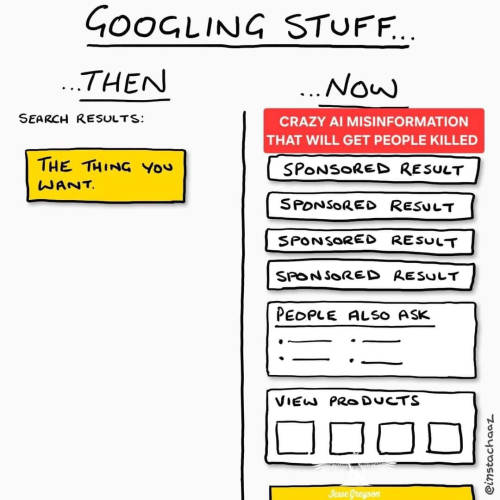

I'm in tech and I agree that there are some things that LLMs can do better (and certainly faster) than I can.

1. Provide workable solutions to well-described (but fairly straightforward) problems. For example "using jq (a json query language tool) take two json files and combine them in this manner...."

2. Identify and fix format issues: "what changes are required to make this string valid json?"

3. Do boring chores: "Using this sample data, suggest a well normalised database structure. Write a script that creates a Postgres database, and creates the tables decided above. Write a second script that accepts json objects that look like EXAMPLE and adds them into the database."

However, while there is a risk my employer will decide that LLMs can reduce the workforce significantly, 99% of what I do can't be done by LLMs yet and I can't see how that would change.

LLMs have the ability to draw on the expertise and documentation created by millions of people. They can synthesise that knowledge to provide answers to fairly casually asked questions. But they have no *understanding* of the content they're synthesising, which is why they can't give correct answers to questions like "what is 2+2?" or "how many times does the letter r appear in strawberry?" Those questions require *understanding* of the premise of the question. "Infer, based on hundreds of millions of pages of documentation and examples, how to use this tool to do that thing" is a much easier ask.

The other thing about having no understanding is that they can't create anything truly new. They can create new art in the style of the grand masters, compose music, write stories... But only in a derivative sense. LLMs possess no mind, so they can't *imagine* anything. Users who use LLMs to realise their own art are missing out on the value of learning how to create their art themselves. Just as I am missing out on the value of learning how to use the tool jq to manipulate json files, which would enable me to answer my own question.

LLMs have such a large environmental footprint that they're morally dubious at best. It should be alarming that LLM proponents are telling us to just use these tools without worrying about the environment, because we aren't doing enough to fix climate change anyway. "Leave solving the future to LLMs?!" LLMs aren't going to solve climate change; they're incapable of *understanding* and *innovating*. We already know how to save ourselves from climate change, but the wealthy and powerful don't want to because it would require them to be less rich and powerful.

The trillion dollar problem is literally "how do we change our current society such that leadership requires the ability to lead, a commitment to listen to experts and does not result in the leader getting buckets of money from bribes and lobbying?" preferably without destroying the supply chain and killing hundreds of thousands.

so like I said, I work in the tech industry, and it's been kind of fascinating watching whole new taboos develop at work around this genAI stuff. All we do is talk about genAI, everything is genAI now, "we have to win the AI race," blah blah blah, but nobody asks - you can't ask -

What's it for?

What's it for?

Why would anyone want this?

I sit in so many meetings and listen to genuinely very intelligent people talk until steam is rising off their skulls about genAI, and wonder how fast I'd get fired if I asked: do real people actually want this product, or are the only people excited about this technology the shareholders who want to see lines go up?

like you realize this is a bubble, right, guys? because nobody actually needs this? because it's not actually very good? normal people are excited by the novelty of it, and finance bro capitalists are wetting their shorts about it because they want to get rich quick off of the Next Big Thing In Tech, but the novelty will wear off and the bros will move on to something else and we'll just be left with billions and billions of dollars invested in technology that nobody wants.

and I don't say it, because I need my job. And I wonder how many other people sitting at the same table, in the same meeting, are also not saying it, because they need their jobs.

idk man it's just become a really weird environment.

There really isn't that tight a correlation between egg size and adult size. I mean we're not expecting something big, but some of the biggest of these came out of mid to small eggs. It's so cool!

Bugs & Bunnies

Anyway

When there's something in particular that you're spiralling on (for example a recent breakup, something not going your way etc), that's a great opportunity to learn poems or songs. Whenever you find yourself re-entering the spiral, recite/sing the poems/songs instead. Try to see how much you remember before rechecking your source material. It will help.

Honestly it boils down to reparenting yourself & rewiring your own neuronal pathways & telling yourself a firm “stop” when you notice your mind slipping down negative loopholes & being present in the moment & enjoying being mid task rather than waiting for it to end & not thinking of inertia as your baseline and natural way of living
